Anthropic and OpenAI updates

The Inference

Your weekly AI roundup. Models, security, tooling.

Claude Gets a Sonnet, Gemini Gets Sharper, and Everyone Gets a Little More Secure

Author: Nidhi Vichare

Date: February 20, 2026

Read time: ~5 min

TL;DR

Anthropic: Claude Sonnet 4.6 delivers Opus-level performance at Sonnet pricing, unchanged from 4.5, plus a 1M-token context window in beta.

Google: Gemini 3.1 Pro is in preview; its verified ARC-AGI-2 reasoning score of 77.1% is more than double that of 3 Pro.

OpenAI: Lockdown Mode and Elevated Risk labels to guard against prompt injection.

Microsoft: Four purpose-built AI agents (Debugger, Profiler, Test, Modernize) inside Visual Studio.

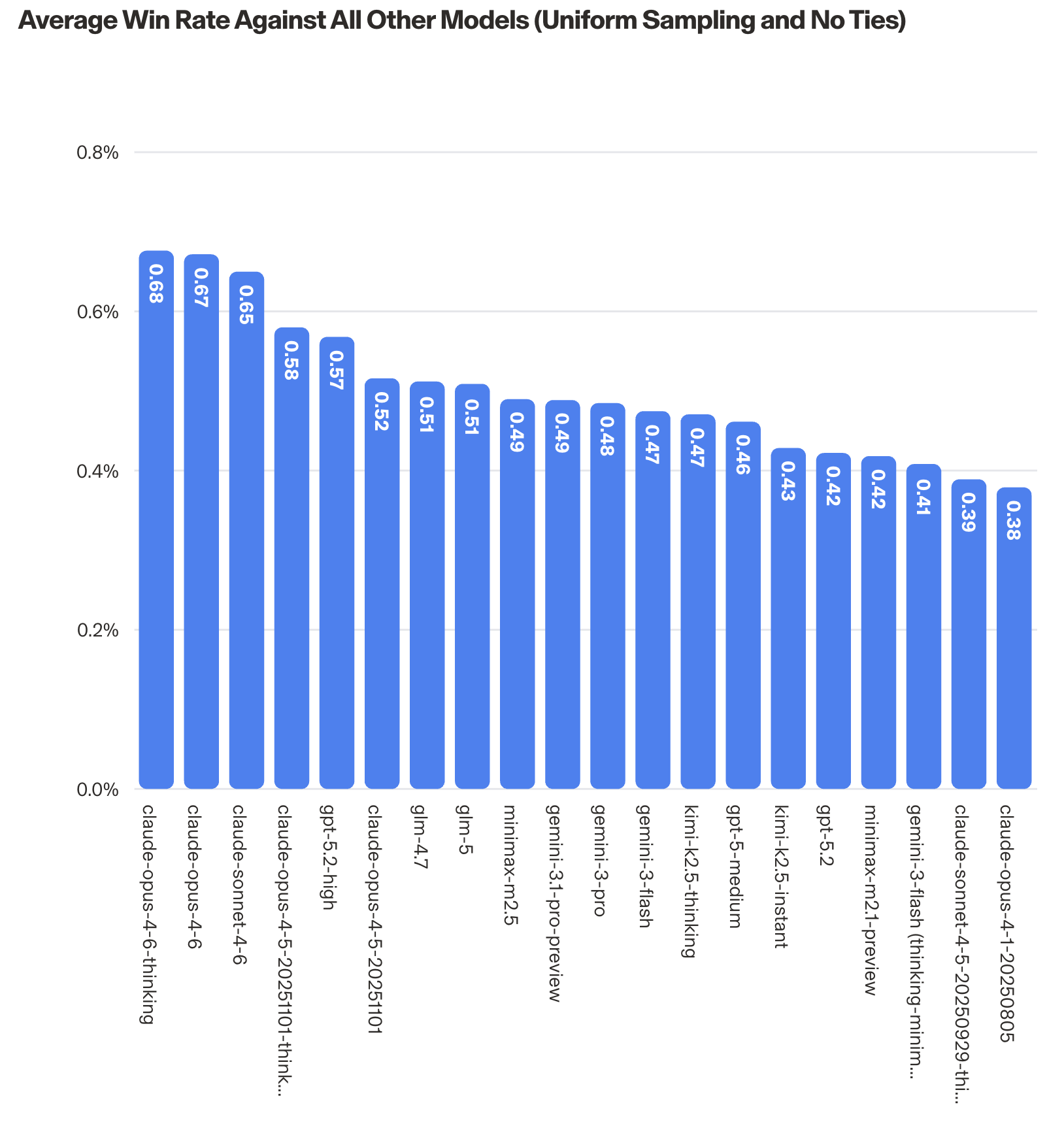

Credit: arena.ai, results as of February 20, 2026.

🤖 Anthropic Releases Claude Sonnet 4.6

Anthropic has officially launched Claude Sonnet 4.6, with improvements across coding, computer use, long-context reasoning, agent planning, knowledge work, and design. That breadth is the point: this is a well-rounded upgrade rather than a narrow performance bump.

Perhaps the most telling signal of Anthropic's confidence: Sonnet 4.6 is now the default model powering both claude.ai and Claude Cowork. For developers, it ships with a 1-million-token context window in beta and carries the same pricing as its predecessor, Sonnet 4.5: $3 per million input tokens and $15 per million output tokens.
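To make that pricing concrete, here is a minimal Python sketch that estimates a request's list-price cost from the $3 / $15 per-million-token rates. It assumes flat per-token billing; prompt-caching or batch discounts, and whether prompts using the 1M-token beta window are billed at a different rate, are outside its scope. The function and constant names are illustrative.

```python
# Sonnet 4.6 list prices in USD per million tokens, as quoted in the article.
INPUT_USD_PER_MTOK = 3.00
OUTPUT_USD_PER_MTOK = 15.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate one request's list-price cost in US dollars.

    Assumes flat per-token billing: no caching or batch discounts, and no
    separate rate for very long prompts.
    """
    return (input_tokens * INPUT_USD_PER_MTOK
            + output_tokens * OUTPUT_USD_PER_MTOK) / 1_000_000


# Hypothetical request: a 150,000-token prompt and a 4,000-token reply.
print(f"${estimate_cost_usd(150_000, 4_000):.2f}")  # → $0.51
```

Output tokens cost five times as much as input tokens, so for generation-heavy workloads the length of the reply, not the prompt, can dominate the bill.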

"Performance that would have previously required reaching for an Opus-class model, including on real-world, economically valuable office tasks, is now available with Sonnet 4.6. The model also shows a major improvement in computer use skills compared to prior Sonnet models." Anthropic

Bottom line: Opus-level performance at Sonnet pricing. That's a significant value shift for anyone building or working with Claude at scale.

✨ Gemini 3.1 Pro Now Available in Preview

Google is opening up Gemini 3.1 Pro to developers in preview, rolling it out across the Gemini API in Google AI Studio, Gemini CLI, Google Antigravity, and Android Studio. It's also accessible via Vertex AI, Gemini Enterprise, the Gemini app, and NotebookLM.

The headline claim? A major leap in core reasoning ability. Google points to its performance on ARC-AGI-2, a benchmark designed to evaluate how well a model handles entirely novel logic patterns. There, 3.1 Pro achieved a verified score of 77.1%, more than double the score of its predecessor, 3 Pro.

"Building on the Gemini 3 series, 3.1 Pro represents a step forward in core reasoning. 3.1 Pro is a smarter, more capable baseline for complex problem-solving." Google

Why it matters: More than doubling a verified score on a benchmark built around novel logic patterns is no small feat. If the gains hold up in real-world workflows, 3.1 Pro could meaningfully shift how teams choose models for complex problem-solving.

🔒 OpenAI Adds Lockdown Mode and Elevated Risk Labels to ChatGPT

OpenAI is taking aim at a growing class of security threats with two new features designed to reduce the risk of prompt injection attacks, the kind where malicious content embedded in external data tries to hijack an AI's behavior.

Lockdown Mode restricts how ChatGPT interacts with external systems, significantly narrowing the surface area for data exfiltration via prompt injection. Alongside it, Elevated Risk labels will now appear on certain product surfaces to give users a heads-up when a specific feature introduces additional exposure.

A concrete example: developers can grant Codex network access so it can look up documentation online. That's useful, but it also opens a potential attack vector. The new label surfaces that tradeoff explicitly, rather than leaving it implied. For now, the labels appear in ChatGPT, ChatGPT Atlas, and Codex.
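OpenAI hasn't published how Lockdown Mode works internally, so the following is only a conceptual Python sketch of the two ideas described above: refusing outbound requests that injected instructions could use to exfiltrate data, and attaching a visible risk label to any capability that touches external systems. The `Capability` class, the `ALLOWED_HOSTS` allowlist, and both functions are hypothetical, and treating lockdown as "block every outbound fetch" is a deliberate simplification.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse

# Hypothetical allowlist, for illustration only (not OpenAI's configuration).
ALLOWED_HOSTS = {"docs.python.org"}


@dataclass(frozen=True)
class Capability:
    name: str
    touches_external_systems: bool  # e.g. network access, browsing, connectors


def risk_label(cap: Capability) -> Optional[str]:
    """Surface a warning for any capability that widens the attack surface."""
    return "Elevated Risk" if cap.touches_external_systems else None


def allow_fetch(url: str, lockdown: bool) -> bool:
    """Decide whether an agent may fetch `url` on the user's behalf.

    In lockdown every outbound request is refused, so even if injected
    instructions reach the model, they have no outbound channel to send data
    through. Otherwise only allowlisted hosts are reachable.
    """
    if lockdown:
        return False
    return urlparse(url).hostname in ALLOWED_HOSTS


network = Capability("network access", touches_external_systems=True)
print(risk_label(network))                                                # → Elevated Risk
print(allow_fetch("https://docs.python.org/3/", lockdown=False))          # → True
print(allow_fetch("https://attacker.example/?d=secret", lockdown=False))  # → False
print(allow_fetch("https://docs.python.org/3/", lockdown=True))           # → False
```

The label and the gate are independent on purpose: the label informs the user about a tradeoff they may still choose to accept, while the gate enforces a hard limit when stricter protection is wanted.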

Insight: Security is moving from afterthought to first-class concern in AI product design. Labels make the risk visible where it happens.

🛠️ Microsoft Builds a Suite of Pre-Built Agents for Visual Studio

Microsoft is embedding a new wave of AI assistance directly into Visual Studio, with a suite of pre-built agents tailored to specific developer workflows. Rather than a generic assistant, each agent is purpose-built around what developers actually spend time on.

The lineup includes four agents:

- Debugger: uses call stacks, variable state, and diagnostic tools to work through errors

- Profiler: identifies bottlenecks and suggests optimizations

- Test: generates unit tests

- Modernize: handles framework and dependency upgrades

"Each preset agent is designed around a specific developer workflow and integrates with Visual Studio's native tooling in ways that a generic assistant can't." Microsoft

Developers can access these agents through the Visual Studio chat panel via the agent picker or by typing @. The integration with native tooling is the key differentiator: these aren't chat wrappers bolted on top; they're built to operate within the IDE's actual context.

Takeaway: IDE-native agents (debug, profile, test, modernize) in one place. Less context-switching, more flow.

More from the ecosystem

🕸️ GraphRAG Brings Context and Verifiability to LLM Responses

Standard retrieval-augmented generation has a fundamental limitation: it flattens your data. When you chunk content into vectors, you can surface similar text, but you lose the relationships, hierarchies, and business logic that give that text meaning. Graphwise is tackling this head-on with a new GraphRAG offering that sits as a semantic layer on top of knowledge graphs, giving LLMs a richer foundation to reason from.

The practical difference matters. With conventional RAG, it's often unclear how a model arrived at its answer or which sources it actually drew on. Graphwise's approach is designed to make every step of the pipeline inspectable, with responses grounded in both documents and graph entities, so you get not just an answer but one you can trace and verify.

Under the hood, the system blends three retrieval strategies: knowledge graph queries, vector search, and full-text search for keyword-driven discovery. It also takes a knowledge-model-driven approach to understanding user intent, enriching queries with taxonomy or ontology concepts and building a graph representation of the question before retrieval even begins. The result: a system that captures the structure of what's being asked instead of merely matching relevant chunks.
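Graphwise doesn't detail how it combines its retrievers, so this Python sketch substitutes a common technique, reciprocal rank fusion (RRF), to illustrate the general pattern: enrich the query from a taxonomy, run full-text, vector, and graph retrieval, fuse the rankings, and keep provenance (which retrievers found each document, and which graph edges were traversed). All data and names are hypothetical, and the bag-of-words cosine is a stand-in for real embeddings.

```python
import math
from collections import Counter, defaultdict

# Toy corpus, knowledge graph, and taxonomy; all hypothetical.
DOCS = {
    "d1": "Acme Corp acquired Beta Labs in 2024",
    "d2": "Beta Labs researches solid-state battery chemistry",
    "d3": "Gamma Inc sells electric bicycles",
}
# Knowledge graph as (subject, predicate, object, source_doc) triples.
TRIPLES = [
    ("Acme Corp", "acquired", "Beta Labs", "d1"),
    ("Beta Labs", "researches", "battery chemistry", "d2"),
]
# Taxonomy: query term -> related concepts added during query enrichment.
TAXONOMY = {"subsidiary": ["acquired"], "research": ["researches"]}


def tokenize(text):
    return text.lower().split()


def expand(query):
    """Enrich the query with taxonomy concepts before any retrieval runs."""
    terms = tokenize(query)
    return terms + [c for t in terms for c in TAXONOMY.get(t, [])]


def full_text_search(terms):
    """Keyword retrieval: rank documents by how many query terms they contain."""
    hits = {d: sum(t in tokenize(text) for t in terms) for d, text in DOCS.items()}
    return [d for d, n in sorted(hits.items(), key=lambda kv: -kv[1]) if n > 0]


def vector_search(terms):
    """Stand-in for embedding search: cosine similarity of bag-of-words vectors."""
    q = Counter(terms)

    def cosine(text):
        v = Counter(tokenize(text))
        dot = sum(q[t] * v[t] for t in q)
        norm = math.sqrt(sum(x * x for x in q.values())) * math.sqrt(sum(x * x for x in v.values()))
        return dot / norm if norm else 0.0

    scored = sorted(((cosine(text), d) for d, text in DOCS.items()), reverse=True)
    return [d for s, d in scored if s > 0]


def graph_search(query, hops=2):
    """Start at entities named in the query and follow edges for `hops` steps.
    Returns source documents in discovery order plus the traversed triples."""
    q = query.lower()
    frontier = {e for s, _, o, _ in TRIPLES for e in (s, o) if e.lower() in q}
    seen, docs, path = set(frontier), [], []
    for _ in range(hops):
        reached = set()
        for triple in TRIPLES:
            s, _, o, src = triple
            if (s in frontier or o in frontier) and triple not in path:
                path.append(triple)
                if src not in docs:
                    docs.append(src)
                reached |= {s, o} - seen
        seen |= reached
        frontier = reached
    return docs, path


def reciprocal_rank_fusion(rankings, k=60):
    """RRF: score(d) = sum over retrievers of 1 / (k + rank of d)."""
    scores, found_by = defaultdict(float), defaultdict(list)
    for name, ranking in rankings.items():
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] += 1.0 / (k + rank)
            found_by[doc].append(name)
    return [(d, found_by[d]) for d in sorted(scores, key=scores.get, reverse=True)]


def retrieve(query):
    terms = expand(query)
    graph_docs, path = graph_search(query)
    fused = reciprocal_rank_fusion({
        "fulltext": full_text_search(terms),
        "vector": vector_search(terms),
        "graph": graph_docs,
    })
    return fused, path


fused, path = retrieve("What does the Acme Corp subsidiary research")
for doc, retrievers in fused:
    print(doc, retrievers, "|", DOCS[doc])
for triple in path:
    print("edge:", triple)
```

In this toy example, d2 never mentions Acme Corp; the graph hop through the `acquired` edge is what links it to Acme Corp, and the printed edge list is the kind of trace that makes an answer verifiable.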

Insight: For enterprises where data relationships are complex and answer accountability matters, GraphRAG is a meaningful step beyond standard RAG.

🛡️ Checkmarx Brings Agentic Security into the AWS Kiro IDE

Security that lives outside the development environment is security that gets skipped. Checkmarx is working to close that gap with a new integration that embeds its Developer Assist tool directly into AWS Kiro, the recently launched IDE from Amazon.

Once activated and authenticated, the tool continuously analyzes source code and dependencies across the active workspace without requiring developers to context-switch into a separate platform. Security findings surface automatically inside the IDE, paired with contextual information to help developers understand and address issues while the code is still fresh. For teams and stakeholders who need a broader view, the same data is available in the Checkmarx One platform, giving leadership visibility into project-level risk.
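Checkmarx's analysis engine isn't described in the announcement, so this Python sketch illustrates only the dependency half of the idea in its simplest form: checking pinned packages against known-vulnerable versions. A write-time tool would re-run a check like this whenever a manifest changes. The `ADVISORIES` table, the `examplelib` package, and its advisory are invented for illustration; real scanners pull from vulnerability databases and handle version specifiers far more completely than this plain dotted-number parser.

```python
# Hypothetical advisory data: package -> (first fixed version, summary).
ADVISORIES = {
    "examplelib": ((2, 4, 1), "path traversal in archive extraction"),
}


def parse_version(text):
    """Parse a plain dotted version such as '2.3.0' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def scan_requirements(requirements_text):
    """Flag pinned dependencies older than the first fixed version."""
    findings = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # only exact pins are handled in this sketch
        name, version = (part.strip() for part in line.split("==", 1))
        advisory = ADVISORIES.get(name.lower())
        if advisory and parse_version(version) < advisory[0]:
            fixed, summary = advisory
            fixed_str = ".".join(map(str, fixed))
            findings.append(f"{name} {version}: {summary} (fixed in {fixed_str})")
    return findings


print(scan_requirements("examplelib==2.3.0\nother==1.0  # unaffected\n"))
```

Comparing integer tuples rather than strings avoids the classic bug where "2.10.0" sorts before "2.9.0".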

The timing is notable. As AI-assisted coding accelerates the pace at which code is written, the window for catching security issues before they ship is getting shorter. Embedding security analysis directly into the agentic development environment aims to catch vulnerabilities at the moment of creation rather than downstream in a separate review cycle.

Why it matters: Security in the IDE, at write-time. Fewer context switches, faster feedback, and leadership still gets project-level visibility in Checkmarx One.

🗄️ Quest's Trusted Data Management Platform Streamlines AI-Ready Data

Getting data into a state that's actually fit for AI isn't a weekend project. Quest Software is addressing that friction with its new Trusted Data Management Platform, a unified solution that brings together data modeling, cataloging, governance, quality management, and a data marketplace under one roof.

The problem it's solving is one many organizations know well. As Quest put it: building trusted, AI-ready data products can take up to six months, and under that kind of pressure, teams cut corners. Metadata gets skipped, governance workflows get bypassed, data quality checks get ignored, and every department ends up maintaining its own version of a data product. The result: a fragmented, siloed landscape where the data powering business decisions is difficult to trust.

The platform's standout capability is its Automated Data Product Factory, which uses generative AI to let business users create data products from natural language prompts. The goal is to dramatically shorten design cycles and lower delivery costs, while opening up data product creation to people who aren't data engineers. The pitch: rather than waiting months for IT, teams generate AI-ready data products on demand, with governance baked in from the start.
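The announcement doesn't show how Quest enforces governance, but "baked in from the start" generally means a data product can't be published until its checks pass. This Python sketch shows such a publish gate, using a hypothetical `DataProduct` record and an invented policy (required owner, description, and classification metadata, plus a no-null-keys quality rule). It leaves out the natural-language generation step entirely.

```python
from dataclasses import dataclass, field

# Hypothetical policy; field names are illustrative, not Quest's schema.
REQUIRED_METADATA = ("owner", "description", "classification")


@dataclass
class DataProduct:
    name: str
    metadata: dict
    rows: list = field(default_factory=list)
    key_column: str = "id"


def governance_issues(product: DataProduct) -> list:
    """List every reason the product may not be published (empty means OK)."""
    issues = [f"missing metadata: {key}" for key in REQUIRED_METADATA
              if not product.metadata.get(key)]
    null_keys = sum(1 for row in product.rows if row.get(product.key_column) is None)
    if null_keys:
        issues.append(f"quality: {null_keys} row(s) with null {product.key_column}")
    return issues


def publish(product: DataProduct, catalog: dict) -> None:
    """Register the product in the catalog only if every governance check passes."""
    issues = governance_issues(product)
    if issues:
        raise ValueError(f"{product.name} blocked: " + "; ".join(issues))
    catalog[product.name] = product


catalog = {}
draft = DataProduct("churn_by_region", {"owner": "analytics"}, rows=[{"id": 1}, {"id": None}])
try:
    publish(draft, catalog)
except ValueError as err:
    print(err)  # reports both missing metadata fields and the null-key row
```

Collecting every issue instead of stopping at the first one gives the requester a complete fix list in a single pass.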

Bottom line: One platform for modeling, catalog, governance, and quality. Plus natural-language data products with governance built in. Aimed at shortening months-long cycles.

The Bigger Picture

This week's releases paint a clear picture of where the industry is heading: better models at lower price points, stronger scores on reasoning benchmarks, tighter security guardrails, smarter data infrastructure, and AI that fits more naturally into the tools developers already use. Whether it's GraphRAG making LLM answers auditable, Checkmarx catching vulnerabilities as code is written, or Quest aiming to compress six-month data projects into natural-language prompts, the theme is the same: the ecosystem isn't just getting more powerful; it's getting more trustworthy. The pace isn't slowing. If anything, a weekly release cadence is becoming the new normal.

Keep building. We'll keep tracking.

The Inference